In an attempt to create a ('huge') data pool for backups, I've been keeping an eye out for a new server. I kinda have a figure of €400 in my head that I'd like to have as a maximum budget for doing this. My criteria for this server were the following:

- Low power consumption

- 8 x LFF 3.5" drive bays

- As always, cheap to buy!

These reasons are pretty much self-explanatory, with maybe the exception of the second point? Let me briefly explain. SFF (2.5") bays generally hold smaller-capacity hard drives than LFF (3.5") bays, and these days SFF bays are primarily used for SSDs. I think the average 'maximum' capacity of an SFF HDD is 1.2TB or thereabouts. Multiplying the drive capacity by the number of drive bays gives you the total raw capacity of the system, so an SFF array is generally smaller in capacity but faster, whilst an LFF array is slower but offers more capacity.

For a long while I was looking into buying an HP 8th-generation DL3x0 server. I had previous experience with a 6th-gen DL360, so it would basically be an updated version of that. However, these are mainly SFF servers (although LFF variants exist, and I did find a couple). Ultimately what put me off this HP server was the power draw of its processor(s). It wasn't significantly high, don't get me wrong, and it was lower than the 6th-generation server I had previously, but I had read that the AMD-processor machines consumed up to a third less power. We'll be able to look into this in detail later on, once the server is up and running.

The front of my new server, which arrived with all caddies (no HDDs) and the front bezel.

Inside the Dell PowerEdge R515 server

Cost so far: Dell PowerEdge R515 server, 8 x 3.5" drive caddies, H700 PERC controller and shipping from Bulgaria, for a total all-in cost of €228.49. Yes, I agree that doesn't seem to fit my 'cheap to buy' criterion. Let's take a quick look at that. €8.59 (x8) is the cheapest Chinese 3.5" caddy clone available on eBay (deduct €68.72 from the server's cost = €159.77). Servers are heavy; these are not cheap things to send in the post!! (Deduct, let's say, €30 for postage = €129.77.) Even at €130, I feel that's not a bad price for a server?
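The back-of-an-envelope adjustment above can be written out as a quick calculation. This is a minimal Python sketch using the figures from this post; the €30 postage value is the rough guess from the text, not a quoted price, and the function name is just my own. Decimal is used so the euro amounts don't pick up floating-point rounding noise.

```python
from decimal import Decimal

# Figures taken from this post
total_paid = Decimal("228.49")   # server + caddies + H700 + shipping from Bulgaria
caddy_price = Decimal("8.59")    # cheapest 3.5" caddy clone on eBay, per caddy
caddy_count = 8
postage_guess = Decimal("30")    # rough postage estimate, not an actual quote


def effective_server_price(total, caddy, count, postage):
    """Return (caddy value, price minus caddies, price minus caddies and postage)."""
    caddies = caddy * count
    after_caddies = total - caddies
    return caddies, after_caddies, after_caddies - postage


caddies, after_caddies, server_only = effective_server_price(
    total_paid, caddy_price, caddy_count, postage_guess
)
print(f"Caddies worth:          EUR {caddies}")
print(f"Server minus caddies:   EUR {after_caddies}")
print(f"...and minus postage:   EUR {server_only}")
```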

O.K. So you've got your hands on your new server... what next? The first thing I did was remove the 4 x 4GB PC3 ECC memory and replace it with 4 x 8GB PC3 ECC memory, doubling the server's RAM from 16GB to 32GB. Then I wondered whether the server would take those 4GB DIMMs in addition to the 8GB DIMMs I had just installed... and the answer is yes, but there is a 'but'!

I found the memory to be a bit of a pain. Not the installation, that was fine; it was the way the server actually handles the installed memory that was a little strict. For instance, with 2 CPUs, all the white memory slots (2 slots per CPU) need populating with identical memory. If you only install into the first slots (A1 and B1), it'll annoy you with the message 'Memory is not optimal' on boot and ask you to press F1 to continue or F2 for setup. In the end I populated all the white memory slots with 8GB DIMMs (4 x 8GB = 32GB) and all of the black memory slots with 4GB DIMMs (4 x 4GB = 16GB), again getting the 'Memory is not optimal' warning. So I removed the 4GB DIMMs and instead partially loaded 2 x 8GB DIMMs into A3 and B3 respectively... again facing the 'Memory is not optimal' message. You can work around this by disabling keyboard errors in the BIOS. I did this, but later started to wonder whether it would cause performance issues. Then I realised that, for the sake of ordering another 2 x 8GB DIMMs, I would end up with 64GB of memory, no annoying BIOS messages and no need for the keyboard-error workaround, so everything would be 'as intended'.

In the system BIOS you can disable the keyboard prompt on error

Cost so far, 2 x 8GB DIMMs €28 bringing the total to €256.49 (64GB system memory)

This server came to me with an H700 PERC controller. This is a RAID controller, so it's no good for the HBA / JBOD mode that I ultimately want for my ZFS array under TrueNAS. I've ordered a Dell H200 card, which I'll reflash with the IT-mode firmware from LSI's 9211-8i once it arrives.

H700 PERC controller removed, and the server's LED panel reporting its removal

Cost so far, H200 controller card €32 brings the total to €288.49.

Things are certainly starting to add up on this server build and I've still no storage, so let's address that now. I was looking to install four drives, leaving four bays free for future expansion. I've heard stories about 2TB drives being the worst you could possibly buy in terms of reliability, but for the life of me I couldn't remember which brand they were talking about! I didn't really overthink this or bother to research it any further. Instead I put it down to it likely being a particular brand rather than a general rule of thumb (I hope). I also came across five 2TB HGST drives for €79.97, so I ordered these and kept my fingers crossed! The idea here is that I can install four drives and keep the fifth as a spare. To do this properly (with correct cooling airflow inside the server) I also needed to purchase four drive bay 'blanks'. These stop air coming in through the empty bays, which in turn forces it through the populated drive bays, thus cooling the drives.

Cost so far, 5 x 2TB HDDs €79.97 + 4 drive bay blanks €13, brings the total to €381.46.
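To put 'huge' into rough numbers: raw capacity is simply drive size multiplied by drive count, but the usable space in a ZFS pool depends on the layout chosen. The sketch below is a rough Python estimate only. It assumes every drive is the same size, ignores ZFS metadata, padding and reserved space, and ignores the TB vs TiB difference, so the figures TrueNAS reports will be somewhat lower. The layouts listed are common ZFS options, not necessarily the one TrueNAS will recommend, and usable_tb is just an illustrative name.

```python
def usable_tb(layout: str, drives: int, size_tb: float) -> float:
    """Very rough usable capacity of a single-layout ZFS pool (no overhead)."""
    if layout == "stripe":
        data_drives = drives                 # no redundancy at all
    elif layout == "mirror":
        if drives % 2:
            raise ValueError("2-way mirrors need an even number of drives")
        data_drives = drives // 2            # each drive has one mirror partner
    elif layout == "raidz1":
        data_drives = drives - 1             # one drive's worth of parity
    elif layout == "raidz2":
        data_drives = drives - 2             # two drives' worth of parity
    else:
        raise ValueError(f"unknown layout: {layout}")
    if data_drives < 1:
        raise ValueError("not enough drives for this layout")
    return data_drives * size_tb


# Four 2TB drives now, and all eight bays if they are ever filled
for drives in (4, 8):
    for layout in ("stripe", "mirror", "raidz1", "raidz2"):
        print(f"{drives} x 2TB {layout:>6}: ~{usable_tb(layout, drives, 2.0):.0f} TB usable")
```

The mirror case means a pool of 2-way mirror pairs, which trades half the raw space for redundancy; RAIDZ gives more space per drive at the cost of parity calculations.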

The final piece of the puzzle that we need to discuss is the operating system. I've mentioned previously that I intend to run TrueNAS (TrueNAS Core, to be specific), and although I could be tempted to virtualise this installation under a hypervisor such as Proxmox, I don't think I'm going to. Primarily this server will be for backups of everything that I have and use. I feel this is a good enough purpose for this server without diluting it by using it for other things. I'm even intending to eventually locate this server in a different building, so it'll also count as an 'off-site' backup (located in the garage vs my home!).

Obviously the operating system itself has to be stored somewhere, and it cannot live on the main storage array, so what are the solutions? I've an idea for now, but this may change in the future. My initial idea is to run TrueNAS from a USB stick plugged into one of the two internal USB slots inside the server. As a backup I'll actually mirror the boot USB and keep one as a spare in the drawer. This is an integrated feature in TrueNAS (and has been since the days of FreeNAS). If this idea doesn't work out, my change of plan will be to use a USB-to-SATA converter plugged into one of the internal USB slots, with either an HDD or an SSD. Obviously the USB stick idea will be more cost effective than an SSD!

An example of my fall back solution

Hopefully the final purchase: 2 x 8GB USB sticks at €12.99, bringing the grand total to €394.45.
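Since the whole build is meant to stay under the €400 figure from the start of this post, here is a small Python sketch that re-adds each 'Cost so far' line and checks what's left. The item labels are just my own shorthand for the purchases listed above.

```python
from decimal import Decimal

BUDGET = Decimal("400.00")

# (item, cost in EUR) in the order they were bought in this post
purchases = [
    ("R515 + 8 caddies + H700 + shipping", Decimal("228.49")),
    ("2 x 8GB DIMMs", Decimal("28.00")),
    ("H200 controller", Decimal("32.00")),
    ("5 x 2TB HGST HDDs", Decimal("79.97")),
    ("4 x drive bay blanks", Decimal("13.00")),
    ("2 x 8GB USB sticks", Decimal("12.99")),
]

running = Decimal("0")
for item, cost in purchases:
    running += cost  # running total after each purchase
    print(f"{item:<36} EUR {cost:>7}  total EUR {running:>7}")

print(f"Left in the budget: EUR {BUDGET - running}")
```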

LET'S GET STARTED - BIOS Update

System BIOS was previously v2.0.3, with the latest available on Dell's website being v2.4.1. An interesting note here is that the default download is 8,793KB (nearly 9MB), which I thought was rather large for a BIOS. It is in fact the installer, the BIOS flasher and the whole software bundle for doing this inside Windows 10 64-bit. As I currently have no access to internal hard disk drives, this clearly wasn't an option. Well, it kinda could have been if I'd gone down the lengthy path of attaching a USB hard drive and installing Windows 10 onto that; instead, I chose to create a FreeDOS bootable partition on a spare USB memory stick using Rufus. On Dell's BIOS download page there is an 'other formats' option, which opens a popup listing a smaller .exe file and a .pdf file. The PDF basically explains that the large .exe is for 64-bit Windows and the smaller .exe is the DOS BIOS updater. So I simply copied the smaller .exe onto my newly made bootable FreeDOS USB stick, booted from it and updated the BIOS in a fraction of the time.

With the BIOS updated, I started to wonder whether there were any other things I could update, firmware perhaps? I remember in the past (with that older G6 HP server) I downloaded an AIO .ISO updater boot disk from HP that scanned my system and updated everything it could find. Surely there must be such a thing for a Dell system?

Again I used Rufus, this time to get the updater image onto a bootable USB for the server. However, maybe I downloaded the wrong thing? Or maybe I just blatantly don't understand this updater (it's far from automated), because when selecting the USB as the repository... computer says no. I couldn't really figure out what I needed to do. I even tried entering downloads.dell.com as a CIFS/NFS share, because I read somewhere that Dell have retired their FTP server. Putting it simply, I just gave up!

Keen-eyed readers may have spotted that I had the server running through a digital power usage meter. I've already said we'll use that to do some power tests once the server is fully operational, but as a teaser, here's the power reading whilst attempting to update the firmware.

87.8W.. Wow
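Since low power consumption was my first criterion, it's worth turning a wattmeter reading into a yearly figure. This is a rough Python sketch only: it assumes the 87.8W reading (taken with no data drives installed, during the firmware attempt) stays constant 24/7, and the tariff is a made-up example value, not my actual electricity price.

```python
# ASSUMPTION: constant 24/7 draw equal to a single wattmeter reading.
HOURS_PER_YEAR = 24 * 365


def yearly_energy_kwh(watts: float) -> float:
    """Convert a constant power draw in watts to kWh per year."""
    return watts * HOURS_PER_YEAR / 1000


def yearly_cost(watts: float, eur_per_kwh: float) -> float:
    """Yearly running cost in EUR for a constant draw at a flat tariff."""
    return yearly_energy_kwh(watts) * eur_per_kwh


reading_w = 87.8
example_tariff = 0.30  # EUR per kWh, hypothetical: substitute your own

print(f"{yearly_energy_kwh(reading_w):.1f} kWh per year")
print(f"about EUR {yearly_cost(reading_w, example_tariff):.2f} per year "
      f"at EUR {example_tariff}/kWh")
```

Real figures will differ once drives are spinning and the load changes, which is what the proper power tests later on should show.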

UPDATE: I'm updating this on the 1st of June. My two sticks of 8GB RAM have arrived, were installed without issue and are working perfectly.

In addition, the H200 controller card has also arrived. I removed the end plate and installed it exactly where the previous H700 controller card was located.

Like for like comparison (H200 top, H700 bottom)

However, upon powering on... Houston, we have a problem!! Now, admittedly I hadn't done anything with the card yet (no reflash), as I just wanted to test whether it was working or not, for eBay feedback purposes.

A quick search later and I came across this useful video by 'Art of Server' which basically told me that the server is looking for a specific tag from the hardware installed into that particular 'internal' slot. In this case the server doesn't find that particular information tag, so just refuses to boot.

It sounds a little drastic, admittedly, AND complicated, but I'll try to make it a little easier to digest. Basically I needed to change the hardware tag (the subsystem ID) stored on the controller card, in its SBR rather than its main firmware. Reading it off the card, editing it and then writing it back is the simplest way I can explain it.

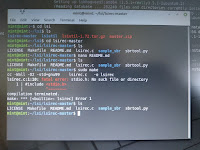

To do this I needed an operating system, and then I needed to get files from the internet onto this 'temporary' operating system in order to do all this with the controller card... Linux Mint to the rescue! I happened to have a burnt disc of Linux Mint lying around, but if you don't have one you could always download a copy and use Rufus again to make a bootable USB, like we've done before.

The solution, initially, is to move the controller card into another slot (like one of the regular slots near the back of the server). It's only a temporary move and the card can't stay there, because the SAS leads won't reach. If your leads do reach and you're happy leaving your controller near the back, then you can skip this next segment.

Modifying the H200's hardware tag:

- Boot into Linux (whichever distro you like). As I said previously, I used a burnt disc of Linux Mint.

- Install the build tools:

```
# apt install build-essential unzip
```

- Compile and install lsirec and lsiutil:

```
# mkdir lsi
# cd lsi
# wget -O lsirec-master.zip https://github.com/marcan/lsirec/archive/master.zip
# wget https://github.com/exactassembly/meta-xa-stm/raw/master/recipes-support/lsiutil/files/lsiutil-1.72.tar.gz
# tar -zxvvf lsiutil-1.72.tar.gz
# unzip lsirec-master.zip
# cd lsirec-master
# make
```

(If you get a 'stdio.h' error here, make sure build-essential is installed: # apt-get install build-essential)

```
# chmod +x sbrtool.py
# cp -p lsirec /usr/bin/
# cp -p sbrtool.py /usr/bin/
# cd ../lsiutil
# make -f Makefile_Linux
```

- To modify the SBR so the card matches an internal H200I, first get its bus address:

```
# lspci -Dmmnn | grep LSI
0000:05:00.0 "Serial Attached SCSI controller [0107]" "LSI Logic / Symbios Logic [1000]" "SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] [0072]" -r03 "Dell [1028]" "6Gbps SAS HBA Adapter [1f1c]"
```

Bus address: 0000:05:00.0 (in my own case it was 0000:02:00.0)

We are going to change the subsystem ID from 0x1f1c to 0x1f1e (again, for me it was 0x1f1d to 0x1f1e).

**Disclaimer: your bus address and IDs could be different, so make sure you use your own details.**
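If you'd rather not pick the bus address and ID out by eye, a minimal Python sketch like the one below can pull both out of a single lspci -Dmmnn line. It assumes lspci's machine-readable (-mm) field order (slot, class, vendor, device, optional -r/-p flags, subsystem vendor, subsystem device) and that -nn appends each numeric ID in square brackets, as in the output shown above. The function name parse_lspci_mm is just my own.

```python
import re
import shlex


def parse_lspci_mm(line: str):
    """Return (bus_address, subsystem_id) from one line of `lspci -Dmmnn`."""
    # shlex honours the double quotes around each field; drop -rXX / -pXX flags
    fields = [f for f in shlex.split(line) if not f.startswith("-")]
    bus_address = fields[0]
    subsys_device = fields[-1]  # e.g. "6Gbps SAS HBA Adapter [1f1c]"
    match = re.search(r"\[([0-9a-fA-F]{4})\]$", subsys_device)
    if match is None:
        raise ValueError(f"no subsystem id found in: {line!r}")
    return bus_address, "0x" + match.group(1).lower()


sample = ('0000:05:00.0 "Serial Attached SCSI controller [0107]" '
          '"LSI Logic / Symbios Logic [1000]" '
          '"SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] [0072]" -r03 '
          '"Dell [1028]" "6Gbps SAS HBA Adapter [1f1c]"')
print(parse_lspci_mm(sample))
```

Pasting in your own line from lspci -Dmmnn | grep LSI gives the address to use with lsirec and the ID to look for in the cfg file.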

- Unbind the card:

```
# lsirec 0000:05:00.0 unbind
Trying unlock in MPT mode...
Device in MPT mode
Kernel driver unbound from device
```

- Halt the card:

```
# lsirec 0000:05:00.0 halt
Device in MPT mode
Resetting adapter in HCB mode...
Trying unlock in MPT mode...
Device in MPT mode
IOC is RESET
```

- Read SBR:

```
# lsirec 0000:05:00.0 readsbr h200.sbr
Device in MPT mode
Using I2C address 0x54
Using EEPROM type 1
Reading SBR...
SBR saved to h200.sbr
```

- Transform binary SBR to (readable) text file:

```
# sbrtool.py parse h200.sbr h200.cfg
```

Modifying the hardware tag:

Modify the SubsysPID on line 9 of h200.cfg (e.g. using vi, vim or nano), from this:

```
SubsysPID = 0x1f1c
```

to this:

```
SubsysPID = 0x1f1e
```

Important: if you find a line like this in the cfg file:

```
SASAddr = 0xfffffffffffff
```

remove it!

- Save and close the file.

- Build the new SBR:

```
# sbrtool.py build h200.cfg h200-int.sbr
```

- Write it back to the card:

```
# lsirec 0000:05:00.0 writesbr h200-int.sbr
Device in MPT mode
Using I2C address 0x54
Using EEPROM type 1
Writing SBR...
SBR written from h200-int.sbr
```

- Reset the card:

```
# lsirec 0000:05:00.0 reset
Device in MPT mode
Resetting adapter...
IOC is RESET
IOC is READY
```

- Show the card's info:

```
# lsirec 0000:05:00.0 info
Trying unlock in MPT mode...
Device in MPT mode
Registers:
DOORBELL: 0x10000000
DIAG: 0x000000b0
DCR_I2C_SELECT: 0x80030a0c
DCR_SBR_SELECT: 0x2100001b
CHIP_I2C_PINS: 0x00000003
IOC is READY
```

- Rescan the card:

```
# lsirec 0000:05:00.0 rescan
Device in MPT mode
Removing PCI device...
Rescanning PCI bus...
PCI bus rescan complete.
```

- Verify the new ID (H200I):

```
# lspci -Dmmnn | grep LSI
0000:05:00.0 "Serial Attached SCSI controller [0107]" "LSI Logic / Symbios Logic [1000]" "SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] [0072]" -r03 "Dell [1028]" "PERC H200 Integrated [1f1e]"
```

Finally, you can now shut down, move the card to the 'dedicated internal' slot, and your server will boot once again!
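For repeat runs (or several cards), the manual edit in the 'Modifying the hardware tag' step could be scripted. This is a minimal Python sketch, not part of lsirec or sbrtool: it assumes h200.cfg uses the 'Key = value' lines shown in that step, rewrites SubsysPID from the old ID to the new one (refusing to touch the file if the current ID isn't the one you expect), and drops a SASAddr line whose value is all f's. OLD_PID must be set to your own card's ID first, and the script name patch_sbr.py is only an example.

```python
import re
import sys

OLD_PID = "0x1f1c"   # your card's current SubsysPID (it was 0x1f1d on mine)
NEW_PID = "0x1f1e"   # PERC H200 Integrated


def patch_sbr_cfg(text: str, old_pid: str, new_pid: str) -> str:
    """Swap SubsysPID and drop an all-f placeholder SASAddr line."""
    out = []
    replaced = False
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        key, value = key.strip(), value.strip()
        if sep and key == "SubsysPID":
            if value.lower() != old_pid.lower():
                raise ValueError(f"expected SubsysPID {old_pid}, found {value}")
            line = f"SubsysPID = {new_pid}"
            replaced = True
        elif sep and key == "SASAddr" and re.fullmatch(r"0x[fF]+", value):
            continue  # placeholder SAS address: leave the line out
        out.append(line)  # every other line is kept unchanged
    if not replaced:
        raise ValueError("no SubsysPID line found")
    return "\n".join(out) + "\n"


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 patch_sbr.py h200.cfg   (edits the file in place)
    path = sys.argv[1]
    with open(path) as f:
        patched = patch_sbr_cfg(f.read(), OLD_PID, NEW_PID)
    with open(path, "w") as f:
        f.write(patched)
```

It would sit between sbrtool.py parse and sbrtool.py build. Even so, I'd still open the file and eyeball it before writing anything back to the card.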

Front hard drive bay:

With the H200 controller card installed exactly where I wanted it, I could utilise my front hard drive bays for the first time. My only problem? I only have two spare 1TB SATA drives. Thankfully, servers (and I'd imagine it's most of them) will happily take SATA drives in addition to their own native SAS drives, so I chucked in two drives to run a brief test with.

That second picture (above) shows the two drives being detected at boot by the H200. Later, however, I decided to load up the configuration utility (see below) and disable the BIOS boot support, choosing the boot support option 'OS only' so the drives aren't shown at boot. It saves boot time and it's not necessary anyway.

I 'burnt' TrueNAS Core onto a USB stick with... you've guessed it... Rufus once again, and booted from it. Just to remind you, my 2 x 8GB USB sticks are still in the post and haven't been delivered yet, so I'm testing using my 'fall back' method (an internal USB-to-SATA connector and a spare 2.5" HDD to act as the internal drive to install the operating system onto). I'm sure I don't need to show you the really simple installation process. It was my first time using TrueNAS, and it was very simple to install and use.

Create a storage pool (I let TrueNAS analyse and recommend what to choose), assign a user, enable your particular sharing protocol (in my Windows case it was SMB), mount the network drive and you're literally up and running. Of course there are a whole host of other, more detailed options, but those are for another day. Today was just a test.

Mirrored network drive hosted on TrueNAS

I'm afraid that's all I've got for you in this instalment. I'm now waiting for parts to arrive in the post (2x8GB memory, HDDs, HDD blanks), but don't worry... I'll let you know and we'll continue this journey together! Thanks for reading ;-D Updating soon!

TO BE CONTINUED..
