I've always been intrigued by the idea of buying a network server; I just don't know exactly why. Maybe it's the idea of having something that I've never owned before? Some computing hardware that I've been unintentionally neglecting? Maybe it's because I have a subconscious need for the benefits and features that it could provide me with? Website hosting, home automation, a Plex server, a dedicated video processing unit... the list goes on! Or maybe it's because I want to learn more about servers, and there is no better way than owning one yourself?

I had already snapped up a bargain and got my hands on 8 x 146GB 2.5" SAS (network server) hard drives for a few pounds (£3.04). However, I was unsure exactly what to do with them, as I had no way of accessing a SAS drive. Ironically, SAS controllers can talk to SATA drives, but unfortunately SATA controllers cannot talk to SAS drives.

Additionally, I had already made a mistake in the past: I bought some DDR3 ECC registered RAM for a desktop computer that I was going to convert to a Xeon processor and which, I assumed, would have taken the cheaper ECC memory. In fact I should have been buying DDR3 ECC unregistered RAM. I was completely unaware that there were two types of ECC memory and I just presumed ECC memory was all the same (i.e. intended for network servers) but hey, we all live and learn!

Whatever the reason was for wanting a network server, when I saw this eBay listing I just couldn't resist!

So now I own a network server! This post is dedicated to this network server and my trials and tribulations with it. I will update this post every time something new happens, so bear with me as this post could have frequent updates!

I guess I should start by telling you a little bit about this network server. It's a seventh generation HP Blade 460C. Full specifications of the 7th Gen or 'G7' can be found here:

https://support.hpe.com/hpesc/public/docDisplay?docId=emr_na-c02535780

Interestingly there is NO mention of Xeon E5530 CPUs in those official specs, so these are probably an afterthought and not a factory fit option. Spec wise the E5530s don't look that bad. I mean, I'd rather have these two CPUs fitted than having NO CPUs fitted! Potentially in the future I may upgrade the CPUs to the low powered, SIX cored L5640.

https://ark.intel.com/content/www/us/en/ark/products/37103/intel-xeon-processor-e5530-8m-cache-2-40-ghz-5-86-gt-s-intel-qpi.html

As you can see from the listing it didn't come with RAM, HDD's or HDD caddies. So because I already had the HDD's and the RAM in my parts bin, I just needed to get my hands on a pair of caddies.

Once I had ordered the caddies, I started researching the 460C server online. How was I going to power it on? There are plenty of server PSUs on eBay but which one would work? At the time I just didn't know. Now I do: virtually all of the server PSUs are 12V (although you can mod them to get more voltage out of them; 13.8V is common but unnecessary for this project).

The next problem was that there are none of the usual external connectors on the Blade server! Yes, there is an unusual connector on the front of the server and a large square connector on the rear (which mates with the official chassis), so I needed to do a little more research! The answer is that there is an HP dongle which plugs into the front connector and gives you two USB ports, a VGA port and a serial port. The HP part number for this is 409496-001 / 416003-001 and you will need one in order to be able to do anything with your server.

Additionally, I had no idea that the majority of network servers will NOT work outside of their official server cabinet. These server cabinets are typically very large, house multiple servers and cost thousands, so getting one is totally NOT an option! Luckily the majority of servers have a hidden set of DIP switches, and by researching your particular server model you should be able to find exactly which DIP switches you need to change to get your server working outside of the official server cabinet.

In the case of the 460C Blade server, changing DIPs numbered 1 and 3 from their OFF positions to their ON positions will do the trick. Here's an image of the location of these DIP switches on the G7 460C..

As you can see from the information panel on the right, they don't fully mention even half of the DIP functions! Obviously they don't want you to do this!! ROFL.

Another useful spot on that diagram is item #12, the on-board USB header. I have my own plans for that. Thinking ahead to when the server is fully set up and running headlessly somewhere in my home, I don't want to have to keep the HP dongle installed just to get a network connection. I'd much prefer to extend that internal USB header to the outside of the chassis. So that's exactly what I've done. Here are a few photos; let me walk you through it.

Firstly we need to open up the server and locate the internal USB header.

Buried under the drive bays, there it is, we've found it! So now I'm looking for an old donor USB cable and somewhere I have a circuit board to USB socket lead I salvaged out of a TV decoder box a few years ago..

With the two USB cables cut to length and soldered together, I can decide where would be a good place to locate the external USB connection.

I decided to install the USB connection just above the SD card slot. It's still low enough to not obstruct the drive bay area and looks in keeping with the other external opening. Before going any further it's time to mask up the server to protect it from any tiny particles of metal which will be generated as soon as the Dremel gets going.

All measured up and masked up, it was time to start grinding... sometime later...

Probably the hardest part of doing this was gluing the socket into place (hot glue gun). It didn't go exactly where I wanted it to go (i.e. central) but hey, it's good enough for me! [Later this gets revised for a screwed in USB connection]

However the theory is perfect, once this server is fully configured, up and running and living on a shelf I won't have to leave the front HP dongle attached to access a USB slot. The USB to RJ45 network dongle is now attached to the internal USB header via my extension.

While I had the server out I remembered having (somewhere) a banana plug lead which went to an XT connector; luckily for me I just found it. This will be part of the next segment of Project Blade, the PSU. Stay tuned until then!

UPDATE - 15/10/20 : So today I got a fan through the post. Its dimensions are 100mm x 100mm x 15mm, which I hope will be sufficient to extract the air through our server case. I had previously mocked up a folded piece of paper to figure out what size of fan I could use, noting motherboard obstructions and positioning. Here's the new fan..


Using electrical tape to 'guesstimate' its future position..

However by doing this I discovered that I had a problem...

So looking at the problem in detail I could see that the highest points inside of the case were..

Causing the fan to sit in a position which was slightly too high (vertically) for the case to close. Admittedly I could have removed the battery holder which is located above the mezzanine card. Instead I decided it could be useful to support the fan should the silicone break(?), so I just bent the side of it over, allowing sufficient clearance.

Therefore a little 'tinkering' was needed! I came to the conclusion that if I shaved off the four bottom corners of the fan, and cut a full hole in the top of the case, then the fan would 'drop down' into the hole and would remain slightly above the top of the case, thus giving me those extra few millimetres that I needed for clearance.

Just like that, the fan dropped into place! As if it was as easy as that!! Here are the profile views of the fan once it was finally in its place. Pretty good I thought!

Now, it was just a case of holding the fan in its place. I thought about drilling holes and using fan screws to hold the fan in place. However, an additional concern of mine was to seal the gaps around the fan as well. I had intended to use my hot glue gun to seal the fan in place but then I had a brain wave! If I was to use silicone instead, and if the fan ever needed replacing.. then the silicone would pull out in one single lump and it would also provide an airtight seal. So.. silicone was used!!

Admittedly it's not great (flashback to the USB port anybody?!) but you'll never see the underside and it'll certainly do the job. Also now there is no need for the fan screws either.

How is the server looking now you may ask? Here's a few pictures.

As you can see from the left picture, we have 3mm of fan clearance. This enables the top case to be fitted and removed as it was originally intended. The fan itself sits 3mm above the top case, so again that's pretty good in itself.

The next thing which needs to be addressed is powering the fan, which is a 12V fan. I intend to utilise a second set of banana leads (coming in the post) and plug directly into the server's second set of banana sockets.

The left pair of banana plugs will be for the fan, whilst the right pair of banana plugs will be for the power supply (also in the post). Both sets of banana plugs are wired together and into the motherboard, which is also 12V, so this should be as simple as that. Of course, we will find out in our next instalment! Thanks for reading thus far :-)

UPDATE - 15/11/20 : So welcome back my interested readers! What's been going on in my world of Project Blade since last month you may ask? Quite a bit actually! Let me catch you up..

I was poking around in my spares box when I came across an old fan guard. Thinking about that logically.. yes, it certainly would be a good idea to temporarily fit that to the server's newly mounted exhaust fan. Even if it would initially be secured only by Blu Tack! (later a few dabs of hot glue)

The power supply arrived. In the end I went for a 750W HP server power supply, HSTNS PL18 (which is available under any of these HP part numbers: 506821-001, 511778-001 or 506822-101). I got this for the bargain price of £7 (yes £7!).

In terms of getting the power supply to switch itself on automatically (once it has power), I referred to the internet of many things and in particular an RC forum, who showed it can be as easy as soldering a wire from port 33 to port 36. Other internet sources have also shown soldering a 1000 Ohm resistor across those same ports; however, I didn't have a resistor, so the wire worked well for me. After modifying the server power supply, this means that as soon as the wall socket is plugged in and switched on, the server power supply is live and powers up automatically. It will also stay live until it's switched off at the wall.

As you can see from my own shots, after soldering I used my hot glue gun to ensure the contacts remained securely in place and I also used a couple of cable tie bases to ensure that the wire strain doesn't dislodge them.

The 12V top mounted fan connects directly into the bullet connectors, meaning that if the power supply is live then the fan is on (even if the server is not), and with that I took the plunge and powered on the server's power supply.

At this point the server itself has an orange/amber light in the middle below the two drive bays. If the server is left alone, then it will automatically turn green after a few minutes and then begin its boot process. However you can also press the amber light (which is actually a button) to get it to turn green instantly and begin the boot process a bit sooner. The boot process of this 7th generation Blade server will take at least three minutes and thirty seconds, so don't be expecting a fast POST!

Bear in mind that I had second hand SAS drives installed and I didn't know if they were working, defective, full of data or blank! I booted from a USB thumb drive and installed Windows 10.

It was at this point that I discovered that I had no internet connection. My SMC EzConnect RJ45 to USB network adaptor proved itself to be obsolete. Apparently you cannot get a driver for it that is any newer than Windows XP! So with a newer Windows 10 compatible RJ45 to USB adaptor ordered from China (thanks again eBay), we proceeded with a wireless network adaptor borrowed from my Raspberry Pi.

I was interested to see what CrystalDiskMark would make of the SAS drives, I was kinda surprised at their overall speed. Bearing in mind, this was a single disk being tested, no RAID was setup at this point.

After that was done I installed Speedfan just so that I could get an idea of the internal temperatures and I am so glad that I did. Core temperatures were sitting in the high 70's and low 80's which was alarming.

I realised that I needed to get the server 'buttoned up' so that the fan had to draw air in from the front of the chassis, through the hard drives, RAM and CPUs. For this I made a small wooden back-plate.

Then I basically used that silver parcel tape to cover all of the holes (yee-haw!). Airflow was better but it had only really dropped to the high 60's. The CPU itself has a stated maximum of 76 degrees so that wasn't allowing much headroom. Things just HAD to change!

So once again I raided my parts bin, hoping that I had some kind of adjustable fan controller? The thought being that if I could fit that to the server then I could hopefully turn the fan up and get it to draw more air through and ultimately lower the temperatures that I was seeing.

Above you will see that I had to alter the fan connections slightly; now the positive goes through the fan speed controlling dial and then onward to the fan. The result however wasn't what I had hoped for. I had hoped that the fan wouldn't have originally been running at 100%, however it was. All the fan controller would do is slow the fan slightly or stop the fan. So I needed to rethink the actual fan I was using.

I changed the base plate slightly and passed the fan wires directly through. I have a few smaller (and much noisier) fans but obviously I've already cut a fairly big hole so I also made a plastic template and had to use plenty of tape.. this server is turning into something primarily held together with silver tape! That will be addressed in the future!

I also found a BIOS setting 'Optimal cooling' under processor management, which basically underclocks the CPU from 2.4GHz to 1.6GHz. With that change made in the BIOS and the newer higher RPM fan installed, my core temperatures are in the high 40's and low 50's, which is much better. The negative to this is that now it SOUNDS like I own a server!! This is something else that will need refining, but for now I'm happy to continue on this journey.

I went through the seven 2.5" 136GB SAS hard drives, checking them, seeing if anything was installed and basically attempting to understand how the P410i controller card worked. One of the hard drives was dead, but the other six were working fine. All were empty and all had a variety of different amounts of logical drives assigned. I was confused.

Let me share the knowledge that I've learnt with you. Even if a SAS hard drive is empty your RAID card retains the information relating to the previous hard drive array that was installed. Thus in the photo above you will see that one of those drives, once installed singularly, was originally part of 6 logical drives. To resolve this basically it's a case of pressing F8 and deleting all of the previous logical drive settings.

I realised this a little bit too late (I had already installed Windows 10) and I certainly didn't want to reinstall Windows 10. So I circumvented that process by using Macrium Reflect Free to take an image of the Windows partition and saved it externally to a removable hard drive. Then, once I had inserted two cleaned SAS hard drives and created a RAID 1+0 array at boot (striping for slightly increased performance and duplication for data integrity), I could image the Windows partition back across and I didn't lose any data. It was quite quick as well, taking under 12 minutes.

To compare against CrystalDiskMark's earlier scores I re-ran the benchmark to see what kind of performance boost I had received from creating the logical drive and RAID array.. I nearly fell off my chair!

In terms of read speeds, initially it was 80.07 and went up to 134.24 (168% of the original, or roughly a 68% increase). Initial write speed of 78.76 went up to 111.12 (141% of the original, or roughly a 41% increase). WOW!! definitely worth doing :-)
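For anyone wanting to check the sums, here is a minimal Python sketch of the arithmetic behind those benchmark numbers. The function names are my own invention for illustration; the four figures are the CrystalDiskMark readings quoted above. It shows the difference between "the new score as a percentage of the old one" and "the percentage increase", which are easy to mix up.

```python
def percent_of_original(before: float, after: float) -> float:
    """New score expressed as a percentage of the old score."""
    return after / before * 100.0


def percent_increase(before: float, after: float) -> float:
    """How much bigger the new score is than the old one, in percent."""
    return (after - before) / before * 100.0


# Single-disk scores versus RAID scores, as quoted in the post.
SCORES = {
    "read": (80.07, 134.24),
    "write": (78.76, 111.12),
}

for label, (before, after) in SCORES.items():
    print(
        f"{label}: {percent_of_original(before, after):.0f}% of the original, "
        f"an increase of {percent_increase(before, after):.0f}%"
    )
```

So the read score is about 168% of what it was (a gain of roughly 68%) and the write score about 141% (a gain of roughly 41%).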

Whilst I was doing all of this I came across a rather annoying glitch, pictured below. I don't think it was anything associated with what I was doing, just more of a coincidence really I think?

1783-Slot 0 Array controller is in a lock-up State due to a hardware configuration failure. (Controller is disabled until this problem is resolved)

Luckily this isn't quite as serious as it may sound. It's just a case of opening up the server and going under the drive bays at the front to remove the RAID controller card for 10 minutes or so, and also unplugging the battery from the RAID controller card.

Whilst I was back under the hood of the server, I had been meaning to address my hot glued USB side port, which had fallen off internally. Whoever said using hot glue would have been a good idea?! hahaha!

Back in Windows 10 and it was time to take a quick look at the system memory. If you remember, I had previously (incorrectly) purchased some ECC registered RAM and had never been able to use it. Here's what CPUz says about the memory..

What else was left to do? Now that the cooling was a lot better I wanted to run Cinebench to stress the CPUs and see what kind of a result this server is capable of getting. I went back into the BIOS and removed the underclock so that the CPUs were no longer restricted to 1.6GHz.

I fired up Cinebench and ran it twice. The best score I got was 790 and to be honest, I didn't really know if this was a good thing or a bad thing! I also took a quick note of the power draw whilst the server was under full load (194W). The full load Speedfan Core temps maxed out at 61 degrees, perfect I thought.

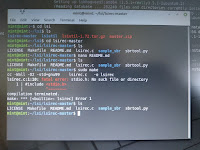

That was pretty much all of the system related checks over with. Now what am I actually going to do with this server?! I decided to install Ubuntu Server on one of the spare hard drives. Unfortunately I didn't really get much further than downloading the image, writing it to a bootable USB stick and installing it. Once inside my new Ubuntu Server operating system I discovered that the Raspberry Pi's wireless dongle is rather problematic to get working. I decided to wait until my wired adaptor arrives.

In the meantime I will continue to use Windows 10 and just experiment with a few game servers. My son has already installed a Minecraft server. He says that "it can be a little laggy for a minute or so until that clears and then its fine again for an hour or so". I haven't looked into this yet because it's running far from optimally. I'm guessing that the lag should hopefully be gone once the server has been hard wired.

So now is a good time to pause this update and prepare you for the future adventures of Project Blade!

- 24GB of ECC Registered DDR3 has been ordered, although pictured in that auction was 48GB so I'm keeping my fingers crossed that the latter amount will arrive!!

- 2 x Intel Xeon L5640 (SLBV8) 2.26GHz 6-Core CPU's have been ordered.

- a 3 Port Mini Hub USB 2.0 has been ordered, the thinking here is for when the server is running headlessly it will be handy to have a few more USB ports.

QUICK UPDATE - 25/11/20 :

As it turned out, I was right and bagged myself 48GB of DDR3 ECC memory (instead of the advertised 24GB - so that was a fantastic WIN!) especially when the cost was €25 including shipping!!

Memory went in without a hitch, you just need to remember that each CPU has three channel memory, the closest two slots are Channel 1, the furthest away pair of slots are Channel 3 therefore the two slots left in between are Channel 2.

Each of my Processors now has 32GB of memory that it can access (2 CPU's = 64GB RAM)

After the memory was correctly installed I then decided to tackle the new CPU's (I advise against installing all of your new parts (CPU and RAM) at once in terms of troubleshooting, just do them one at a time). So the heat-sinks came off in double quick time, old quad core'd E5530 CPU's came out. Newer six core'd L5640 (Low Power) CPU's went in, a quick cleanup and thermal paste and it was time to switch it on again!

Excuse the Blue hue to that photo, I had swapped over VGA leads and that particular lead IS that bad unfortunately. Still, not to worry as the server will be running headlessly from now on. It's up and running with Windows 10 (until my newer RJ45 to USB adaptor arrives) then we'll be going down the Ubuntu route.

Just for a quick comparison I fired up Cinebench and hoped to do a like for like benchmark to compare with my 1st Cinebench. I was actually doing this offline with no internet connection (my son had 'borrowed' my WiFi dongle) and I just clicked on the search icon, typed "Cinebench" and then clicked it. It wasn't until I had run both benchmarks that I realised that it was a completely different version of Cinebench!! I will run the exact same version of Cinebench that I did earlier for a better comparison next time I fire up the server. Until then you'll just have to make do with the results from this particular version of Cinebench!

Excuse the quality of the above image, I screen grabbed it from the server running VNC, hence why I've written the 'points figures' in case you can't make them out!

So, what's coming up in the future?

- Wattage from the plug test utilising the correct version of Cinebench for a better comparison.

- Hard wired USB to RJ45 dongle.

- The search for faster network connectivity?

- Sharing the partial knowledge of the rear 100 pin Molex connector's pin outs.

- Sharing the partial knowledge of the mezzanine connector's pin outs.

- Possibly even attempting to make a mezzanine to PCI-E x1 connector (with the hope of being able to run a PCI-E x1 Network adaptor to get faster LAN speeds)

MINI UPDATE 18/02/21 :

All my open phone browser tabs got unintentionally closed when I somehow managed to download 'Barcode Scanner', which was actually a pop up browser advert malware piece of s**t. So I'm going to need to re-research the four or five tabs I had open with the mezzanine information. It's a little annoying but really I should have posted this information and then I wouldn't have lost it, so I've only got myself to blame. Thought for the day, "Don't put off doing something today!".

As for the Blade, it's up and running daily. My son and his buddies are on it (aka Minecraft server). I've been looking into custom building a 4U rack server but I really cannot find anything in a similar price range to this Blade. 64GB of new DDR3 or DDR4 memory is about the total price of Project Blade, let alone the rest of the components that I would need!

In terms of the Blade's exhaust fan, I've had just about enough of the noise from the 92mm fan. I managed to disassemble the server this morning before my son switched it on. I wanted to get the part numbers to research the fan that's currently in use, along with the others I've had previously installed. Then using this information, I plan to research and purchase a better exhaust fan. Here are my previous fans.

Current : Delta Electronics AUB0912VH, CFM 67.9, 45dB, 3800 RPM, 92mm

(31-37 degrees across all cores idle)

Originally : PC Cooler, CFM 22.5, 22dB, 2000 RPM, 110mm.

(I forget exactly, but it was mid 60's under load)

Experimented using : Cooler Master A12025-12CB-3BN-F1, CFM 44.03, 19.8dB, 1200 RPM, 120mm.

(This was initially looking promising, but again mid 60's under load)

So finally I had some actual data to act upon. No more trial and error with fans! The original fan size (110mm) was chosen to be the largest that I could possibly fit into the Blade housing. Unfortunately it was never going to meet the Blade's cooling requirements. I had some hope for the Cooler Master fan; after all, it was a physically bigger fan. However it looked a little bit 'gawkie' perched on top of the Blade. It was nowhere near as nice looking as the thin 110mm original fan.

I remembered the phrase 'functionality over looks' and thought, well if it does the job.. then I'll stick with it. Unfortunately it too was never going to do the job required. So the Cooler Master was uninstalled and the 92mm Delta Electronic is back on the Blade again.

So began my online search for a better fan. Ironically, fans between the sizes of 80mm and 120mm are kinda a bit niche. So I thought I'd bite the bullet and go for a 'gawkie' looking 120mm fan plonked on the top of the Blade server. I wanted a minimum of CFM 70+, with a noise level of 20-25dB, so I've just ordered this :

Can ya tell what it is yet?! It's a Noctua NF-P12-Redux. Its basic specs are an airflow of 120.2 (a figure that appears to be in m³/h, which works out at roughly 70.7 CFM), 25dB, 1700 RPM, 120mm. So this thing should be fantastic; I'll fill you in once it arrives.
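To put the fan hunt into something more repeatable than trial and error, here is a rough Python sketch that checks each fan against my targets (at least 70 CFM and no louder than 25dB). The `Fan` class, the function names and the 25dB cut-off are my own choices for illustration. One loud assumption: the Noctua's 120.2 figure is treated as m³/h rather than CFM, and converted using 1 CFM ≈ 1.699 m³/h. All the other numbers are the ones listed earlier in this post.

```python
from dataclasses import dataclass

M3H_PER_CFM = 1.699  # 1 cubic foot per minute is roughly 1.699 cubic metres per hour


@dataclass
class Fan:
    name: str
    cfm: float
    noise_db: float
    rpm: int
    size_mm: int


def m3h_to_cfm(m3h: float) -> float:
    """Convert an airflow in cubic metres per hour to cubic feet per minute."""
    return m3h / M3H_PER_CFM


FANS = [
    Fan("Delta Electronics AUB0912VH", 67.9, 45.0, 3800, 92),
    Fan("PC Cooler (original)", 22.5, 22.0, 2000, 110),
    Fan("Cooler Master A12025-12CB-3BN-F1", 44.03, 19.8, 1200, 120),
    # ASSUMPTION: the quoted 120.2 is an m3/h figure, so convert it to CFM first.
    Fan("Noctua NF-P12-Redux", m3h_to_cfm(120.2), 25.0, 1700, 120),
]


def meets_target(fan: Fan, min_cfm: float = 70.0, max_db: float = 25.0) -> bool:
    """True if the fan moves enough air without exceeding the noise limit."""
    return fan.cfm >= min_cfm and fan.noise_db <= max_db


shortlist = [fan.name for fan in FANS if meets_target(fan)]
for fan in FANS:
    verdict = "OK" if meets_target(fan) else "no"
    print(f"{fan.name}: {fan.cfm:.1f} CFM, {fan.noise_db:.1f} dB -> {verdict}")
```

On these numbers only the Noctua makes the shortlist: the Delta is just short on airflow and far too loud, and the two quiet fans simply don't move enough air.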

In the next update, I'll talk you through the three USB to RJ45 adaptors I've purchased from China, which one I prefer and would recommend.

USB RJ-45 ADAPTOR SHOOTOUT - 24/03/21 :

Admittedly I'm getting a bit lazy now with this project, which is a shame because the server is running pretty much 24/7 and it's quiet. It's actually quieter than my D-Link 48 port Gigabit switch! It's doing everything that I'm asking it to do without any drama. It's really fantastic! However, as I'm getting tied up with other projects, I'm neglecting Project Blade.. so tonight Matthew.. I pulled my finger out and did the necessary with the USB network adaptors!

Now let's not get ahead of ourselves here!! I'm no rich You-Tuber flaunting his money. I purchased three USB to RJ45 adaptors, mainly because the first one was absolutely useless (you'll see later), and so when buying the second one I also purchased the third one at the same time. So without further ado, here are my adaptors in detail.

ADAPTER #1 - AVERAGE = P9, 5.03 DL + 5.49 UP :

The infamous first USB RJ45 adaptor that I purchased. I didn't really expect much but equally I certainly didn't expect the speeds that I got. Still, at least it was working and that in itself was a start.

Installation was a cinch. The USB adaptor also shows up as a CD-ROM drive, so it's simply a case of installing the driver from the inbuilt CD-ROM and voila! Quick and easy.

I did worry about the maximum speed of USB 2.0 though, and here's what Google says:

USB 2.0 provides a maximum throughput of up to 480Mbps. When coupled with a 1000Mbps Gigabit Ethernet adapter, a USB 2.0 enabled computer will deliver approximately up to twice the network speed when connected to a Gigabit Ethernet network as compared to the same computer using a 100Mbps Fast Ethernet port.

Thus, I was thinking... WTF!! Second and third adaptors ordered ;-)

ADAPTER #2 - AVERAGE = P8, 64.61 DL + 28.87 UP :

The second adaptor was chosen because although it was still USB 2.0 it also incorporated a three port USB hub which can be optionally powered to support power hungry devices.

I wasn't really worried about a USB 3.x device because I already knew that my Blade server doesn't have USB3 so I wasn't really looking for anything more than USB 2.0.

This second adaptor also uses the newer USB-C connector as its main connector, although my apologies, it's hard to see in my own photo.

Installation of this device truly was plug and play; no device drivers were installed by me (possibly Windows 10 may have done that in the background? But if it did there were no notifications and it was up and running in seconds). I was much happier using this network adaptor!!

ADAPTER #3 - AVERAGE = P8, 112.13 DL + 28.98 UP :

The final adaptor and well.. here is a turn up for the books. I would never have guessed that a USB 3.0 device would have outperformed a USB 2.0 device by so much. Especially when it's running from a USB 2.0 port! WOW!! Some difference.

Installation wasn't exactly plug and play, more like plug and pray.. then nothing. Finally, looking in the packaging, there was one of those tiny CD-ROMs, and after copying the driver across, success.. got it working and boy was it worth it!

Suffice to say this IS the network adaptor that is STILL plugged into the Blade server ;-)
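To put those three results next to the USB 2.0 ceiling from the Google quote, here is a small Python sketch. Big assumption: my speed test figures are taken to be in Mbps (megabits per second), which is what speed tests normally report. The names in the code are made up for illustration, and 480Mbps is the headline USB 2.0 signalling rate, so real-world throughput will sit below it.

```python
USB2_MAX_MBPS = 480.0       # headline USB 2.0 rate from the quote above
FAST_ETHERNET_MBPS = 100.0  # ceiling of a 100Mbps Fast Ethernet link

# (download, upload) averages per adaptor, ASSUMED to be in Mbps.
ADAPTERS = {
    "Adapter #1": (5.03, 5.49),
    "Adapter #2": (64.61, 28.87),
    "Adapter #3": (112.13, 28.98),
}


def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8.0


def percent_of_usb2(mbps: float) -> float:
    """How much of the USB 2.0 headline rate a given speed uses, in percent."""
    return mbps / USB2_MAX_MBPS * 100.0


fastest = max(ADAPTERS, key=lambda name: ADAPTERS[name][0])

for name, (down, up) in ADAPTERS.items():
    beats_fast_ethernet = down > FAST_ETHERNET_MBPS
    print(
        f"{name}: {down} down / {up} up Mbps, "
        f"{mbps_to_megabytes_per_second(down):.1f} MB/s down, "
        f"{percent_of_usb2(down):.1f}% of USB 2.0, "
        f"faster than Fast Ethernet: {beats_fast_ethernet}"
    )
print("Fastest download:", fastest)
```

If the Mbps assumption holds, even the winning adaptor is using less than a quarter of what USB 2.0 can carry on paper, so the USB 2.0 port itself doesn't look like the bottleneck here.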

So what's next?

To finish up this project I'm going to re-research all that information that I lost from my phone, for the possibility of hooking up a PCI-E x1 network adaptor to the mezzanine connector inside the Blade server. I feel that I owe it to this write up; the Blade server has justified itself with its exemplary manner. Really I am surprised how good these things are for the money. To balance that comment out, yes, their onboard GPU is poor and of course they don't have any native network ports, which is problematic.

In addition, I've got this idea of rack mounting my Blade server. You'd have thought this would be easy, however the exhaust is now coming out of the top of the Blade, and the Blade server itself is higher than a 1U slot and a little lower than a 2U slot, so it will have to go onto a 2U shelf. Maybe I could design this shelf to house two Blade servers? Let me know below in the comments what you think?
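For the rack mounting idea, the sum is simple enough to sketch in Python. A rack unit (1U) is 1.75 inches, which is 44.45mm. The function names are my own, and the 60mm height used in the example is purely a placeholder, not a measurement of my Blade; swap in the real height (including however far the fan sticks out of the top) before trusting the answer.

```python
import math

RACK_UNIT_MM = 44.45  # 1U = 1.75 inches


def rack_units_needed(height_mm: float) -> int:
    """Smallest whole number of rack units that fits the given height."""
    return math.ceil(height_mm / RACK_UNIT_MM)


def spare_height_mm(height_mm: float) -> float:
    """Vertical space left over once the item sits in its rack slot."""
    return rack_units_needed(height_mm) * RACK_UNIT_MM - height_mm


# HYPOTHETICAL height for illustration only; measure the real thing first.
EXAMPLE_HEIGHT_MM = 60.0

units = rack_units_needed(EXAMPLE_HEIGHT_MM)
spare = spare_height_mm(EXAMPLE_HEIGHT_MM)
print(f"{EXAMPLE_HEIGHT_MM}mm tall -> {units}U slot with {spare:.2f}mm to spare")
```

Anything taller than 44.45mm but no taller than 88.9mm lands in a 2U slot, which matches what I found, and the spare height figure tells you how much room is left above the top mounted exhaust fan.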

More to come (hopefully soon)..